Inflection AI has raised $1.3bn (€1.2bn) from investors that include Microsoft and Nvidia to build an AI supercomputer cluster using 22,000 of the latest Nvidia GPU chips.

More AMD AI software info. Level1Techs in general goes deeper technically than others.

Some notes on how the future of AI has not been written yet. A lot of new breakthroughs coming through.

They have their own CPU/GPUs? Because that is what this thread is about.

Bergamo launch yesterday.

The following video is not just about performance… It also details the advantage of chiplets, which let AMD bring out new processors faster.

Benchmarks on Linux

https://www.phoronix.com/review/amd-epyc-9754-bergamo

Across all of these benchmarks carried out, the EPYC 9754 2P on average had a 385 Watt power draw… In comparison the EPYC 9654 2P had a 447 Watt average and the EPYC 9684X 2P had a 464 Watt average. And need we mention the Xeon Platinum 8490H 60-core processor consuming even more power with a 568 Watt average. The EPYC 9754 power consumption results surpassed my expectations in frankly not expecting Zen 4C to deliver such power efficiency improvements while still performing so well.
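To put the quoted power figures in perspective, here is a minimal sketch that turns them into relative savings. The wattages are the ones quoted from the Phoronix review above; the helper name `power_saving_pct` and the dictionary layout are just for illustration.

```python
# Average power draw in watts, as quoted from the Phoronix review above.
avg_power_watts = {
    "EPYC 9754 2P": 385,
    "EPYC 9654 2P": 447,
    "EPYC 9684X 2P": 464,
    "Xeon Platinum 8490H": 568,
}


def power_saving_pct(baseline_watts: float, candidate_watts: float) -> float:
    """Percent less power the candidate draws relative to the baseline."""
    return (baseline_watts - candidate_watts) / baseline_watts * 100.0


bergamo = avg_power_watts["EPYC 9754 2P"]

# Saving of the EPYC 9754 2P against every other system in the list.
savings = {
    name: round(power_saving_pct(watts, bergamo), 1)
    for name, watts in avg_power_watts.items()
    if name != "EPYC 9754 2P"
}

for name, pct in savings.items():
    print(f"EPYC 9754 2P draws {pct}% less power than {name}")
```

This only compares average draw across the benchmark run. It says nothing about performance per watt, which would also need the benchmark scores.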

To me, the impact of the AMD EPYC Bergamo is hard to understate. An organization can deploy mainstream 32-core and 64-core Genoa parts for mainstream x86 applications. Specialized 16-core Genoa-X SKUs with 48MB of L3 cache per core or 96 core parts with 1.1GB of L3 cache per socket can be added to a cluster for extreme software licensing or HPC workloads. For the sea of 4 and 8 vCPU VMs the AMD EPYC 9754 provides a meaningful upgrade in terms of density and lowering the cost per VM from a power and footprint standpoint. That portfolio all runs the same Zen 4 flavor of ISA which is different than Intel’s P-core and E-cores today, and especially swapping to Arm.

As the market’s attention is focusing on AMD’s confirmation on having TSMC produce its latest Instinct MI300 series generative AI accelerator to be released in the fourth quarter of the year, AMD CEO Lisa Su disclosed that AMD’s server market share has continued to progress and has exceeded 25%.

When answering a DIGITIMES reporter’s question at a recent press conference in Taipei, Su said, “We did make good progress on our CPU business. I actually think our server market share is higher than 20%, … should be over 25%.”

That means AMD’s performance has surpassed the DIGITIMES Research analyst’s previous estimate. A DIGITIMES analyst focusing primarily on the server industry had anticipated that AMD’s share would stand well above 20% in 2023, while Arm would get 8%.

That implies another jump of 7-8 percentage points from the end of 2022. According to Mercury Research, AMD’s server market share grew from 10.7% at the start of 2022 to 17.6% at the end of the year, while Intel fell from 89.3% at the start of the year to 82.4%. AMD’s total share of the CPU market (excluding IoT and custom silicon) rose from 23.3% in 2021 to 29.6% in 2022, while Intel’s share fell from 76.7% in 2021 to 70.4% in 2022.
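A quick sanity check of the "7-8 percentage points" claim, as a small sketch. It assumes the first pair of Mercury Research figures (10.7% to 17.6%) refers to server share, and it takes Lisa Su's "over 25%" as exactly 25% for the arithmetic. The dictionary keys are my own labels.

```python
# (start, end) market share in percent, from the Mercury Research figures above.
# Assumption: the 10.7 -> 17.6 pair is server share.
share = {
    "AMD server": (10.7, 17.6),
    "Intel server": (89.3, 82.4),
    "AMD total CPU": (23.3, 29.6),
    "Intel total CPU": (76.7, 70.4),
}


def point_change(start: float, end: float) -> float:
    """Change in percentage points, rounded to one decimal."""
    return round(end - start, 1)


changes = {name: point_change(start, end) for name, (start, end) in share.items()}

# Assumption: treat Su's "over 25%" as a floor of 25.0 for the implied jump.
su_estimate = 25.0
implied_jump = point_change(share["AMD server"][1], su_estimate)

for name, delta in changes.items():
    print(f"{name}: {delta:+.1f} points")
print(f"Implied AMD server gain since end of 2022: at least {implied_jump:+.1f} points")
```

Since there are only two vendors in these figures, AMD's gain and Intel's loss mirror each other in both the server and the total CPU numbers.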

Your post belongs here

Nvidia published Q2 results

- Record revenue of $13.51 billion, up 88% from Q1, up 101% from year ago

- Record Data Center revenue of $10.32 billion, up 141% from Q1, up 171% from year ago

“Our entire data center family of products — H100, Grace CPU, Grace Hopper Superchip, NVLink, Quantum 400 InfiniBand and BlueField-3 DPU — is in production. We are significantly increasing our supply to meet surging demand for them,”
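The growth percentages above can be inverted to see what the earlier quarters must have looked like. This is a rough sketch using only the numbers in the post; the results are implied values from rounded percentages, not reported figures, and `prior_value` is just an illustrative helper.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value from the current value and % growth."""
    return current / (1.0 + growth_pct / 100.0)


total_revenue_bn = 13.51   # Q2 total revenue, $bn
data_center_bn = 10.32     # Q2 Data Center revenue, $bn

implied_bn = {
    "total, previous quarter": prior_value(total_revenue_bn, 88),
    "total, year ago": prior_value(total_revenue_bn, 101),
    "data center, previous quarter": prior_value(data_center_bn, 141),
    "data center, year ago": prior_value(data_center_bn, 171),
}

# Share of this quarter's revenue that came from Data Center.
data_center_share_pct = data_center_bn / total_revenue_bn * 100.0

for label, value in implied_bn.items():
    print(f"Implied {label}: ${value:.2f}bn")
print(f"Data Center share of Q2 revenue: {data_center_share_pct:.1f}%")
```

The takeaway is that Data Center alone is now roughly three quarters of total revenue, and is larger than the whole company's revenue was one quarter earlier.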

In my view, many companies are looking for an alternative provider given the supply, demand and pricing issues. Hopefully AMD will catch up and grab some share. I see AMD as the ‘only viable alternative’ to Nvidia’s AI chips, but it’s not there yet!

My simple way of looking at the valuations, based on EV/Sales (TTM):

Nvidia 35.5x

AMD 7.36x

Intel 2.98x

Industry avg: 4x
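One way to read these multiples is relative to the industry average. The sketch below uses only the four numbers above. The "sales growth needed" interpretation is my own simplification: it assumes enterprise value stays constant, which it will not in practice.

```python
# EV/Sales (TTM) multiples from the list above.
ev_to_sales = {"Nvidia": 35.5, "AMD": 7.36, "Intel": 2.98}
industry_avg = 4.0

# Ratio of each multiple to the industry average.
# Under the simplifying assumption of constant EV, this is also how many times
# sales would have to grow for the multiple to fall back to the average.
relative_to_avg = {
    name: multiple / industry_avg for name, multiple in ev_to_sales.items()
}

for name, ratio in sorted(relative_to_avg.items(), key=lambda kv: -kv[1]):
    position = "above" if ratio > 1 else "below"
    print(f"{name}: {ratio:.2f}x the industry average ({position})")
```

On this crude measure Nvidia is priced for sales close to nine times today's level, AMD for a bit under two times, and Intel trades below the average.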

Intel: A risky buy, as future growth is not yet visible.

AMD: Downside is limited and there is huge upside potential if things work as expected from Q4 with MI300.

Nvidia: Downside is possible, but upside is still reasonable in the long run. Although it looks overvalued, I believe the downside is limited given the demand for H100, and they are also future-ready with their autonomous automobile pipeline.

Disc: Already have a small position in Nvidia (at 153), planning to take a position in AMD (I feel there is not much to lose here, and I can wait for a year without any expectation).

I have also moved some money to nvidia every time it falls.

nVidia is making certain investments that are indicative of a strategy.

Note “NVIDIA CSP program”. Yes. Nvidia is moving to cloud services. How? By investing/partnering in companies that are showing the most promise/capability.

CoreWeave today announced that it raised $221 million in a Series B funding round led by Magnetar Capital with participation from Nvidia, former GitHub CEO Nat Friedman and ex-Apple exec Daniel Gross. Magnetar contributed $111 million, with the remainder of the investment being split between Nvidia, Friedman and Gross. An Nvidia spokesperson said that the investment represents a “deepening” of its partnership with CoreWeave.

nVidia is playing the kingmaker. “API is ready. No one else has it. Build on it. You get sure shot market + preferential treatment from me will help you against large CSPs”.

This is basically creating a market for nVidia AI services. They get to make > 70% gross margins from their H100 machines, and then they invest in upstream companies and get a share of their pie too (forward integration is the term?). What this also does is that, when the subscription money kicks in, the cyclicality of nvidia's semi biz is taken care of. This part is something even Aswath Damodaran did not talk about in his nVidia valuation, at least not specifically. He did mention he is confident nvidia will make the next technology leap when everything else it is doing appears saturated with many players.

Nvidia is known for its stranglehold over the market for the data center chips that power ChatGPT and other artificial intelligence software. But in a matter of a few months, Nvidia has also become one of the biggest venture capital investors in an important class of customers who need its chips: cloud and AI software startups.

They are moving at a blistering pace. They are far ahead of everyone else. In 2013, they brought in Volta with tensor cores. We are seeing the impact now after 9 years. They are moving to “premium AI services” today. Competition is AWS/Google/Meta. They are customers too!

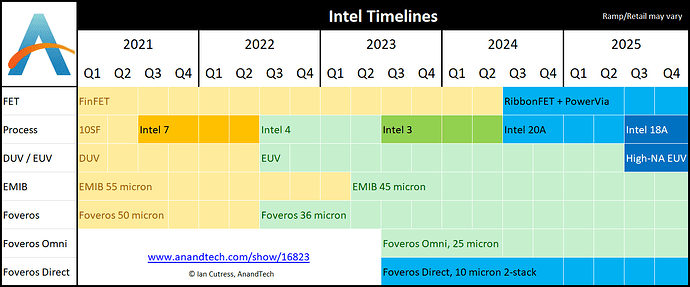

Regarding Intel: the only thing that needs to be monitored, IMHO, is their foundry, specifically Intel 4. It would be a good bet to buy some Intel if their Intel 4 products come out this year. That would mean two things. First, their foundry is working fine. Second, and most important, their design team may have learned to live without help from the foundry to fix their problems (IDM on track and design has learned to manage by itself). This is a big doubt. But it can be a good time to enter.

Gemini is being trained on YouTube videos and uses TPU-v4 chips

Recent developments:

- Google Cloud and NVIDIA expanded their partnership to advance AI computing, software, and services. Google Cloud already had NVIDIA GPUs, and its PaxML framework, which was originally built to span multiple Google TPU accelerator slices, will now be optimized to enable developers to use NVIDIA® H100 and A100 Tensor Core GPUs.

- Tesla launched a new $300 million AI cluster for advanced computation. The system is equipped with 10,000 NVIDIA H100 GPUs.

- Tesla is also building a supercomputer named Dojo, which will work in tandem with the NVIDIA H100 GPU cluster for its Full Self-Driving (FSD) ambition. Elon Musk said,

“We’ll actually take Nvidia hardware as fast as Nvidia will deliver it to us,” and “And frankly, I don’t know, if they could deliver us enough GPUs, we might not need Dojo - but they can’t.”

- Samsung HBM3 memory and packaging technology will be utilized by AMD MI300X GPUs. NVIDIA has so far integrated HBM3 chips exclusively from SK hynix. Samsung is expected to supply ~30% of Nvidia’s HBM3 needs in 2024.

- Intel CEO Pat Gelsinger said that the company has received “a large customer prepay” for “18A” manufacturing capacity. This is a reference to the company’s development of 1.8 nanometer production lines, which will be used to produce cutting-edge chips. Gelsinger also made a statement that Intel will be “competing more for the GPU” market.

- Google Cloud released TPU v5e, which is suitable for midsize and large-scale AI training and inference workloads. It also announced the general availability (GA) of A3, which is powered by NVIDIA’s H100 Tensor Core GPUs.

Based on recent developments, AMD (MI300X), Google (TPU v5e), Amazon (Trainium/Inferentia), and Intel are all expected to ship AI accelerators in the next one to two years. Not sure about Meta and Microsoft, but Meta is building custom AI chips for its metaverse ambitions. These chips may not be as powerful as NVIDIA’s GPUs, but they should be good enough for small to medium-sized LLM training and inference workloads. By that time, NVIDIA will have capitalized on the demand for GPUs with huge margins and built up a huge surplus that it can use to acquire other companies.

Nice update/summary @kondal_investor

The initial thesis of this thread, for me, was that AMD is going to displace Intel in the lucrative server space. Everything is now getting skewed because AI spending is eating into CPU/GPU spending. Never imagined this would happen.

This sinks the infra CPU revenue for AMD this year, I think. They are still guiding strong, very likely because Intel has no answer to AMD's TCO advantage.

“Amazon EC2 M7a instances, powered by 4th generation AMD EPYC processors, deliver up to 50% higher performance compared to M6a instances. These instances support AVX3-512, VNNI, and bfloat16, which enable support for more workloads, use Double Data Rate 5 (DDR5) memory to enable high-speed access to data in memory, and deliver 2.25x more memory bandwidth compared to M6a instances.”

Basically, it appears AMD is helping replace tonnes of old CPUs with new genoa/bergamo ones. If only AI had not made the capex re-allocation for all CSPs, this would have been a bumper year for AMD.

Regarding AMD's AI effort: they are not really targeting only training. Their approach is broad (inference, edge etc.). Of course that would be the way, since they do not have anything that competes with nvda in training right now. Jean Hu mentioned clearly at the Deutsche Bank 2023 Technology Conference that they will get meaningful revenue from MI300X only in 2H2024. By then, we have no idea what the dynamics would be, since nvidia would have received all the supply it has booked with TSMC. Meanwhile, their ROCm development appears to be making great strides. Example, just today: https://github.com/openai/triton/pull/1983. They are working hard.

As of now, AMD is not looking at explosive growth. AI poured water on all my predictions. Accordingly, I have decided to move some money to nvidia.

Edit: Talk about diversifying. It crossed my mind a year back to buy nvidia and AMD together, since one is for CPU and the other is for GPU. But somehow I decided that following two companies would not be worth the effort. In fact, I was researching 3 companies anyway. Bad decision making from my side.

Updates:

TSMC CoWoS ramp-up

- Liu noted that it is not due to TSMC’s manufacturing capacity but rather the sudden threefold increase in CoWoS (Chip-on-Wafer-on-Substrate) demand. TSMC will continue to support the demand in the short term but cannot immediately ramp up production. Liu estimated that TSMC’s capacity will catch up with customer demand in about a year and a half, considering the capacity bottleneck as a short-term phenomenon.

Nvidia sold out well into 2024

- Demand for Nvidia’s flagship H100 compute GPU is so high that they are sold out well into 2024, the FT reports. The company intends to increase production of its GH100 processors by at least threefold, the business site claims, citing three individuals familiar with Nvidia’s plans. The projected H100 shipments for 2024 range between 1.5 million and 2 million, marking a significant rise from the anticipated 500,000 units this year.
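As a quick consistency check on the FT numbers, the projected 2024 range can be compared with the "at least threefold" production claim. A tiny sketch using only the figures quoted above; the variable names are mine.

```python
shipments_2023 = 500_000                   # anticipated H100 units this year
shipments_2024 = (1_500_000, 2_000_000)    # projected range for 2024

# Growth multiple at the low and high end of the 2024 range.
low_multiple, high_multiple = (units / shipments_2023 for units in shipments_2024)

print(f"2024 shipments would be {low_multiple:.0f}x to {high_multiple:.0f}x the 2023 level")
```

So the shipment range and the "at least threefold" production increase line up: the low end is exactly threefold, and the high end is fourfold.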

Broadcom is the second largest AI chip company - stock to watch out for!

- Broadcom is the second largest AI chip company in the world in terms of revenue behind NVIDIA, with multiple billions of dollars of accelerator sales. This is primarily driven by Google’s aggressive TPU ramp as part of its self-described “Code Red” in response to the Microsoft + OpenAI alliance that is challenging Google’s world leadership in AI.

- Meta also makes their in-house AI chips with Broadcom

Intel Foundry

- Intel plans to overtake Samsung and TSMC with its 1.8nm chips by 2025. Samsung is the second-biggest semiconductor chip fabrication company in the world after TSMC.

- Intel Foundry Services and Tower Semiconductor Announce New US Foundry Agreement where Intel will provide foundry services and 300mm manufacturing capacity to help Tower serve its customers globally

Intel is to pay a termination fee of $353M, and Tower is now investing $300M. Convenient.

Regarding intel fab:

- Did you know that once these high end wafers are ready in arizona fab, they will have to be shipped to TSMC for packaging?

… since CoWoS is done in taiwan.

- Intel has booked 3nm capacity at TSMC.

- This juggling of “we will have our own American fab” vs “we are booking TSMC fabs for ourselves” reminds me of another juggling act Pat Gelsinger pulled some time back: “We will get Tower acquisition approval from China” vs “sabre-rattling about the China threat”. We know how that went.

Intel Books Two 3 nm Processor Orders at TSMC Manufacturing Facilities | TechPowerUp

Intel is on a short runway by itself… CHIPS Act money is not enough, as the amount is not exclusive to Intel.

- Has to execute on the nodes (a single miss and you are gone)

2021

Intel's Process Roadmap to 2025: with 4nm, 3nm, 20A and 18A?!

2023

Intel: Meteor Lake & Intel 4 Process Now Ramping for Production

Till now, we are yet to see a product with intel 4. Expected time is 2H23 as told in the earnings calls. Not to mention they need customers for their high capex foundry.

Be careful with this one: 2025 is when they gain parity, as mentioned by their CFO in a recent analyst call. The only positive is that the government thinks Intel cannot be allowed to fail. It may then force American companies to place orders with Intel's fabs. But what could be the possible timeline for this?

- Design team has to show it can work without help from foundry (IDM 2.0)

As of now, no indication of anything.

That’s interesting! Thanks for the deeper insights @kenshin

Any developments on CoWoS for AMD? TSMC is fully occupied with NVIDIA orders I believe, probably samsung?

Xilinx has CoWoS bookings.

If the AI demand is compelling, AMD can tap some of that capacity from Xilinx. The Xilinx business was also projected to trend down in the last earnings call.

Actually, nvidia does not occupy 100% of CoWoS capacity.