2023 is going to be the year AMD makes as much money as it can, as long as the macro environment allows.

Competition and alternatives are knocking on Nvidia’s door. It will be a long journey, but the industry itself is working on an alternative.

“The rest of this report will point out the specific hardware accelerator that has a huge win at Microsoft, as well as multiple companies’ hardware that is quickly being integrated into the PyTorch 2.0/OpenAI Triton software stack. Furthermore, it will share the opposing view as a defense of Nvidia’s moat/strength in the AI training market.”

Sample discussion explaining Nvidia’s monopoly on AI due to the CUDA platform:

Edit: Another example https://www.reddit.com/r/MachineLearning/comments/1040w4q/news_amd_instinct_mi300_apu_for_ai_and_hpc/

A good article on Intel’s earnings…

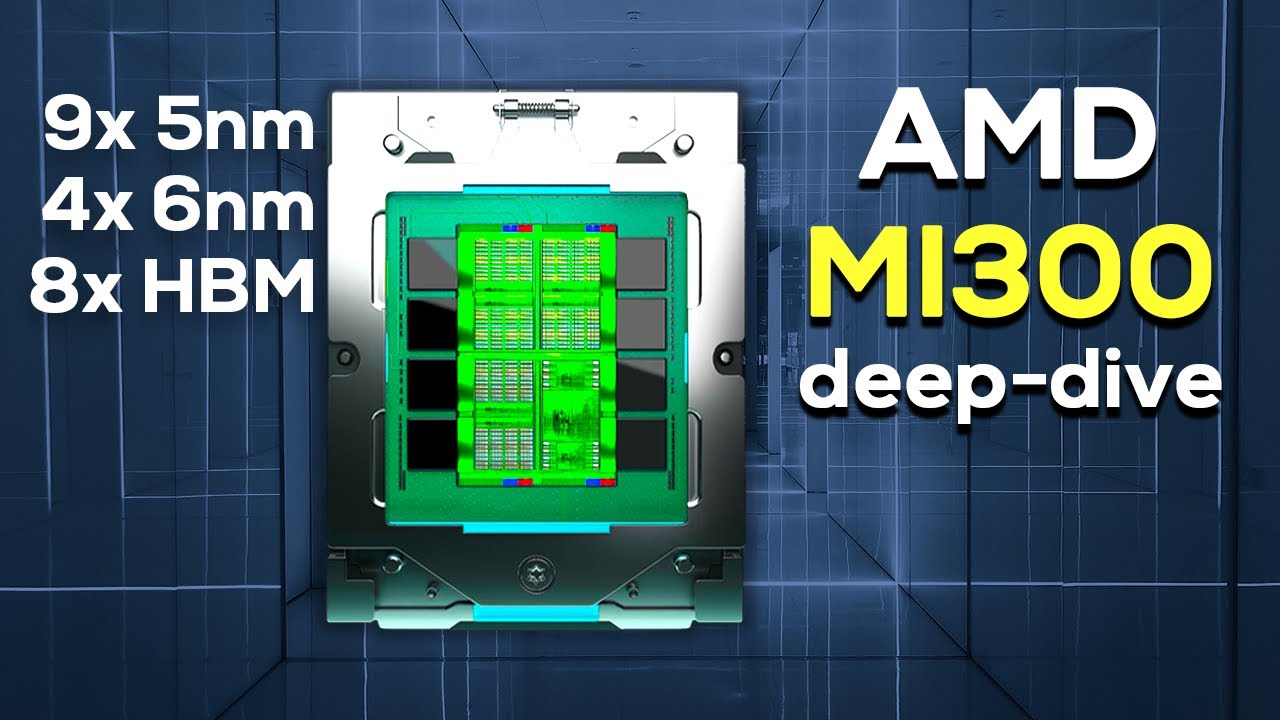

A good summary of what makes MI300 great. I see it as a demonstrator of the future we are heading into.

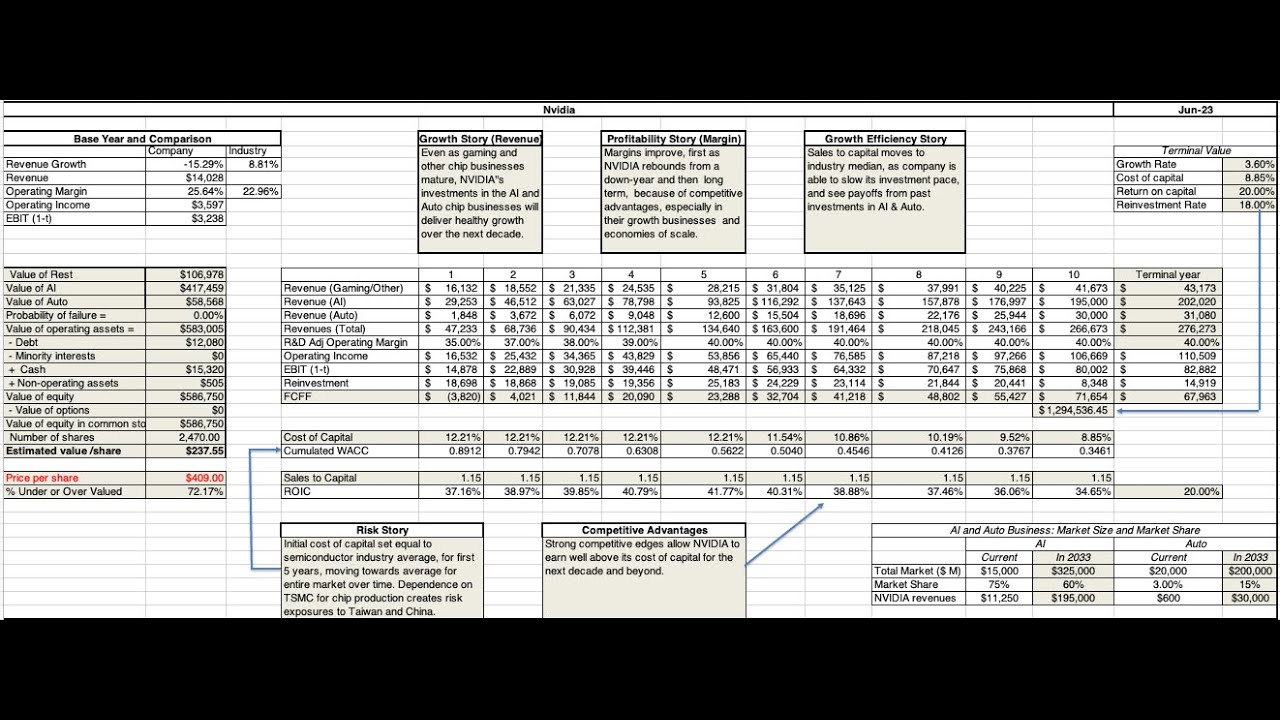

Generative AI will greatly boost revenue for NVIDIA and AMD, especially NVIDIA.

Nvidia’s recent GTC 2023 was fully focused on it.

A lot of what Nvidia does is hype its next technology. You have to give it to Jensen: whatever he says, everyone parrots. A-grade marketing and management. Heck, stocks run as soon as he says “AI” a couple of times. In this case, he talked up the potential of ‘generative AI’ and every stock market analyst parroted the same. It was actually ChatGPT that set the ball rolling; everyone, including Lisa Su and Jensen, is jumping on the bandwagon. Just a year ago, Jensen was hyping software generated by AI.

Nvidia’s main advantage is its software platform, which will be difficult to break. Luckily for other companies, the TAM is big and the industry is putting software effort into supporting alternative hardware, as PyTorch 2.0 did.

OpenAI Triton officially supports only Nvidia GPUs today, but that is changing in the near future: multiple other hardware vendors will be supported, and this open-source project is gaining incredible steam. The ability for other hardware accelerators to integrate directly into the LLVM IR that is part of Triton dramatically reduces the time to build an AI compiler stack for a new piece of hardware.

A good summary is here:

Nvidia’s colossal software organization lacked the foresight to take their massive advantage in ML hardware and software and become the default compiler for machine learning. Their lack of focus on usability is what enabled outsiders at OpenAI and Meta to create a software stack that is portable to other hardware. Why aren’t they the ones building a « simplified » CUDA like Triton for ML researchers? Why does stuff like Flash Attention come out of Ph.D. students and not Nvidia?

Interesting development at Taiwan Semiconductor (TSMC): Warren Buffett is selling all of his stake and US senators are buying puts on the company.

https://twitter.com/WallStreetSilv/status/1646496537504096259?s=20

A good intro article on how difficult it is for CPU makers to penetrate the data center market.

Hey! Long time.

I decided to post an update here because the whole game has been blown open by ChatGPT and by what is going on in the ARM world.

- The latest NVDA (blowout) earnings release and earnings call are a must for anyone interested in this space: NVIDIA Announces Financial Results for First Quarter Fiscal 2024 | NVIDIA Newsroom.

- Not to mention the guidance: Nvidia guidance shocks Wall Street with A.I. boom in sight | Fortune.

- They appear to be selling H100s by the tonne. Everyone is lining up to buy from them.

- TSMC is seeing a spike in utilization from this demand alone. Yes, this is what the gold rush looks like. https://www.marketwatch.com/story/tsmc-stock-outpaces-chips-on-report-nvidia-ai-order-boosted-capacity-use-81f76f26

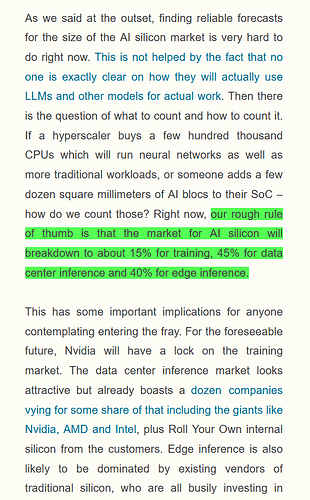

What is happening in the market?

- Data center spending is being restructured to make way for compute GPUs (specifically Nvidia GPUs, since there is no competition right now). Mizuho report: https://twitter.com/EricJhonsa/status/1669103407142174720

- The point above has reduced the data center (DC) CPU TAM, at least in the short term. Ballpark: 2 CPUs for every 8 GPUs in AI boards.

- I am not convinced there is an AI bubble, since companies are showing the money. A bubble may just be forming now in the stocks.

- Nvidia is practically the only ‘arms supplier’ for large-model training.

- ARM is making inroads like never before. SoftBank’s last quarterly earnings mentioned good ARM earnings, which look like large license wins. This affects AMD. https://group.softbank/system/files/pdf/ir/financials/financial_reports/financial-report_q4fy2022_01_en.pdf

- Waiting on the AMD AI show tonight to see if AMD can take some share of the AI training market with MI300. https://www.amd.com/en/solutions/data-center/data-center-ai-premiere.html

I was never good at valuation. The only valuation call I ever made was that AMD was undervalued compared to other companies when I started this thread. I cannot value anything on a standalone basis.

I have not sold my AMD shares yet.

- In my book, AMD is the only company in a position to take some of the training market. Even 10% would make a big difference to its revenue.

- They are working hard on their software. I see active commits to their ROCm repository on GitHub, etc. https://github.com/RadeonOpenCompute/ROCm.

- They released a new documentation site last week AMD ROCm™ Documentation — ROCm Documentation Home.

- They have formed a new vertical for the AI market under Victor Peng (former CEO of Xilinx).

- Nvidia is a solid case for long-term accumulation/SIP.

Good analysis.

How are Indian IT companies poised to capture this AI growth?

Hi @kenshin, thanks a lot for the insights. Would you mind sharing some analysis of AMD’s valuation? As you rightly mentioned, this time the AI boom is real, and I still see only NVIDIA and AMD positioned for it. Intel is nowhere close to grabbing some of this market.

Apart from AI, in a year or two I see big developments coming in the auto industry, especially self-driving cars (NVIDIA has a $14 billion pipeline over the next six years). Again, this will push the chip market.

My 2 cents: I feel Indian listed companies are nowhere in this space apart from modernizing applications with GPT models, whether via the ChatGPT API or other LLMs. So it’s business as usual for them, and they will keep growing at a 10-20% rate.

As I said, I am not good at valuation. I have seen various sites put AMD’s forward P/E anywhere from 18 to 37.

I see those as estimates based on the past: they assume AMD just keeps grabbing Intel’s DC market share, without considering new market potential. Say yearly DC revenue of 6B$; this is DC CPU alone. Now add AI product sales to the mix. How?

AMD showed the current opportunity as 25B$ in 2023, growing to somewhere between 80B$ (conservative) and 150B$ in 5 years at a growth rate of 50% each year (as mentioned by AMD’s CEO: https://images.anandtech.com/doci/18916/42300421.jpg). Yes, they took the aggressive estimate. But that is what Nvidia’s earnings are pointing to: next quarter’s guidance is 65% YoY sales growth.

Now, the Instinct processor ramp and supply start at the end of this year, and next year’s opportunity is 36B$. Say even 10% of those sales land with AMD next year: we are looking at about 3.6B$, call it 4B$, in sales.
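The share arithmetic above can be sketched in a few lines. This is a back-of-the-envelope sketch that assumes the post’s own inputs (a 25B$ opportunity in 2023 compounding at 50% per year, and a 36B$ figure for next year); `project_tam` and `share_revenue` are hypothetical helpers, not anyone’s official model. Compounding 25B$ at 50% gives 37.5B$ for 2024, in the same ballpark as the 36B$ used above, and 10% of 36B$ is 3.6B$.

```python
# Back-of-the-envelope TAM sketch. Inputs are the figures quoted in this post
# (assumptions), not an official AMD or analyst model.

def project_tam(base_billion: float, growth: float, years: int) -> list[float]:
    """TAM for year 0..years, compounding `growth` (0.5 = 50%) each year."""
    return [base_billion * (1 + growth) ** y for y in range(years + 1)]


def share_revenue(tam_billion: float, share: float) -> float:
    """Revenue (in B$) for a vendor that captures `share` of the TAM."""
    return tam_billion * share


# 25B$ in 2023 growing at 50% per year (the post's assumption)
for year, tam in zip(range(2023, 2029), project_tam(25.0, 0.50, 5)):
    print(f"{year}: {tam:.1f}B$")

# 10% of the 36B$ next-year opportunity used in the post
print(f"10% share of 36B$: {share_revenue(36.0, 0.10):.1f}B$")
```

Swapping in your own growth rate or share is a one-argument change, which makes it easy to see how sensitive the AMD story is to both.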

This is a big jump for DC revenue, which currently stands at 6B$ annually. Not to mention the CEO has guided double-digit growth this year. Considering how strong AMD’s DC CPU products are this year and next, 6B$ is a conservative figure even before including DC AI.

So where am I going with this? If AMD can get 15-20% of the AI DC market by 2025, that would be amazing. 15-20% of a potentially 50B$ DC AI market in 2025 (7.5B$ to 10B$) is pretty good for AMD. You can build your valuations on top of this number.
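Putting the two pieces together, here is a sketch of the 2025 scenario. It assumes DC CPU revenue stays flat at the 6B$ the post calls conservative and that the DC AI market is 50B$; both inputs come from the post, and `dc_revenue_scenarios` is a hypothetical helper. At 15% and 20% share the AI piece is 7.5B$ and 10B$, so total DC revenue lands around 13.5B$ to 16B$.

```python
# Hypothetical 2025 data-center revenue scenarios (in B$).
# Assumptions from the post: DC CPU held flat at 6B$, DC AI market of 50B$.

def dc_revenue_scenarios(cpu_billion: float, ai_tam_billion: float,
                         shares: list[float]) -> dict[float, float]:
    """Map each AI market share to total DC revenue (CPU + captured AI)."""
    return {share: cpu_billion + ai_tam_billion * share for share in shares}


scenarios = dc_revenue_scenarios(6.0, 50.0, [0.15, 0.20])
for share, total in scenarios.items():
    print(f"{share:.0%} AI share -> {total:.1f}B$ total DC revenue")
```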

We will have clarity on AMD’s DC AI product sales by Q4 2023. But every single Nvidia customer is looking for alternatives, because Nvidia’s margins are 70-80%. MSFT, Amazon, Meta, Google: all are potential customers for MI300.

Regarding AMD’s DC outlook (on top of the conservative 6B$ estimate), look at what AMD is offering in DC CPUs. I will not be surprised by 40% DC CPU market share by the end of 2024. They are bringing the fight to the ARM collective’s cloud-specific offerings while thumping Intel. Meta is already a customer.

I would love to hear your valuation based on everything I wrote down. A less amateurish valuation would help.

Training will ‘finally’ taper down. The margins are mouth-watering as of now. Any comments on the valuation of Nvidia and AMD?

IMHO, most analysts wrongly lump AMD in with Intel or even Nvidia, which neglects a fundamental difference: AMD is the only company running a successful GPU business alongside its CPU business. All the AI training processors we see are an outgrowth of Nvidia’s GPU business; the general-purpose programmability of the GPU lends itself to this.

As of today, AMD’s training business is negligible or nonexistent. A bet on AMD’s future is a bet on training too. I am not saying this will happen, only that these analysts are missing the potential and ignoring the fact that AMD is targeting the training market. Intel is nowhere here, at least until 2025; they are currently selling some paltry low-end GPUs.

AMD already has a foot in AI training market via supercomputers.

They state their goal: “30x Increase in energy efficiency for AMD processors and accelerators powering servers for AI-training and HPC (2020-2025).”

Lisa Su elaborated on this at ISSCC 2023.
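To put the 30x goal in annual terms: 2020 to 2025 is five years, so, assuming the gain compounds evenly (an assumption for illustration; AMD does not say it will), the implied improvement is 30^(1/5), roughly 1.97x per year, i.e. efficiency nearly doubling every year. A minimal sketch, with `implied_annual_factor` as a hypothetical helper:

```python
# Implied per-year factor for a multi-year improvement target, assuming
# uniform compounding (an assumption; real gains arrive per product generation).

def implied_annual_factor(total_gain: float, years: int) -> float:
    """Return r such that r ** years == total_gain."""
    return total_gain ** (1 / years)


factor = implied_annual_factor(30.0, 2025 - 2020)
print(f"{factor:.2f}x per year, about {factor - 1:.0%} improvement annually")
```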

Apart from this:

- They already have a play in inference with their V70 cards (AMD Data Center & AI Technology Premiere Replay - YouTube). In it, one of the world’s largest hedge funds comes on stage and mentions a 35% improvement in inference performance. I personally think we cannot ignore what these guys say: microsecond speed differences directly affect their bottom line, and they operate in a regulated market.

- They have the edge play too. How? Xilinx. This is a big chunk of business that is currently carrying AMD through tough times in the client business. Most analysts miss this giant inside AMD; AMD is actually two big companies working in tandem.

Finally, some really positive news on the AMD AI software front:

https://rocm.docs.amd.com/en/docs-5.6.0/release.html

- Ongoing software enhancements for LLMs, ensuring full compliance with the HuggingFace unit test suite

- OpenAI Triton, CuPy, HIP Graph support, and many other library performance enhancements

- Improved ROCm deployment and development tools, including CPU-GPU (rocGDB) debugger, profiler, and docker containers

Release Notes — ROCm 5.6.0 Documentation Home

With the latest PyTorch 2.0 and MosaicML releases, AMD hardware is now just as easy to use as Nvidia hardware.

MI300 only ramps in Q4 2023.

In the near term (2023/2024), there does not look to be any competition for Nvidia. They have given Q2 revenue guidance of 11B$ at about 70% gross margin. Next year they might release Hopper-Next, which will simply beat the current H100 and MI300 on pure hardware performance; CUDA will do the rest of the job. They will have all bases covered in 2024 with Hopper and Hopper-Next. We have yet to see Nvidia actually battle any competitor on pricing.

https://www.mosaicml.com/blog/amd-mi250

With the release of PyTorch 2.0 and ROCm 5.4, we are excited to announce that LLM training works out of the box on AMD datacenter GPUs, with zero code changes, and at high performance (144 TFLOP/s/GPU)! We are thrilled to see promising alternative options for AI hardware, and look forward to evaluating future devices and larger clusters soon.

With PyTorch 2.0 and ROCm 5.4+, LLM training works out of the box on AMD MI250 with zero code changes when running our LLM Foundry training stack.

Some highlights:

- LLM training was stable. With our highly deterministic LLM Foundry training stack, training an MPT-1B LLM model on AMD MI250 vs. NVIDIA A100 produced nearly identical loss curves when starting from the same checkpoint. We were even able to switch back and forth between AMD and NVIDIA in a single training run!

- Performance was competitive with our existing A100 systems. We profiled training throughput of MPT models from 1B to 13B parameters and found that the per-GPU throughput of MI250 was within 80% of the A100-40GB and within 73% of the A100-80GB. We expect this gap will close as AMD software improves.

- It all just works. No code changes were needed.

Today’s results are all measured on a single node of 4xMI250 GPUs, but we are actively working with hyperscalers to validate larger AMD GPU clusters, and we look forward to sharing those results soon! Overall our initial tests have shown that AMD has built an efficient and easy-to-use software + hardware stack that can compete head to head with NVIDIA’s.
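One way to read MosaicML’s ratios is as the number of MI250s it would take to match a given A100 fleet. This is a rough sketch that assumes throughput scales linearly with GPU count (the post only measured a single 4xMI250 node) and reads “within 80%/73%” as MI250 delivering about 80% and 73% of A100-40GB and A100-80GB per-GPU throughput; `gpus_to_match` and `breakeven_price_ratio` are hypothetical helpers. By the same logic, MI250 only wins on cost per unit of throughput if it is priced below roughly 80% (or 73%) of the matching A100.

```python
# Rough fleet-equivalence math from relative per-GPU throughput.
# Assumes linear scaling across GPUs, which the single-node results do not show.

def gpus_to_match(reference_gpus: int, relative_pct: int) -> int:
    """GPUs needed at `relative_pct`% of reference throughput (rounded up)."""
    return -(-reference_gpus * 100 // relative_pct)  # integer ceiling division


def breakeven_price_ratio(relative_pct: int) -> float:
    """Max price, as a fraction of the reference GPU, for equal cost/throughput."""
    return relative_pct / 100


for name, pct in [("A100-40GB", 80), ("A100-80GB", 73)]:
    print(f"8x {name} ~ {gpus_to_match(8, pct)}x MI250; "
          f"price parity below {breakeven_price_ratio(pct):.0%} of {name}")
```

Integer percentages keep the ceiling division exact, avoiding float rounding at boundaries like 8 / 0.8.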

This is grand, I am sold! The CUDA monopoly will start waning, and AMD will soon have the hardware everyone is dying for.

Counterpoint: LLM training is not for small players, and the capability gap will only get bigger. Google and Azure will be (are?) the only ones who can afford the hardware required to train an LLM (as opposed to fine-tuning one).

I have personally used the generative AI features in GCP’s Vertex AI, and they are very simple and superb. I don’t think smaller companies should even consider buying all this hardware to ultimately train a relatively small LLM with limited capability, instead of using GCP’s or Azure’s AI offerings. I feel it’s a real possibility that in a year or two everybody realises this, which will reduce demand.

Disc: I have held a position in AMD for months and added recently.

Big news for AMD and Ampere (ARM). This could open a big enterprise gate. Though I suspect Ampere is there ‘de facto’, because Oracle has invested in Ampere and Ampere’s CEO is also on Oracle’s board.

The article below also gives a full rundown of Oracle’s history with Intel.

Zero Intel. Oracle’s X9M was Intel-based. They decided to hedge with AMD (mixing AMD and Intel in 2021) and are now fully AMD.

Found this reference on https://www.reddit.com/r/AMD_Stock/. That sub can get very general in its discussion, but it saves me a lot of research time because many articles get shared there every day.