This is not a stock analysis or recommendation.

I hope the administrators will permit this post, as it is meant to open a deeper discussion.

In today’s world, almost everyone has access to one AI agent or another - you throw out a question, the AI sends back an answer, and the chain of queries and responses can continue until you are satisfied.

I tried the same today, with one particular stock, a random one, Cyient (disclosure: not invested, not following).

It started with a playful question, and then I probed further. By the end, the complete thread ran to more than 10 pages, and the AI agent gave not only results but also proper justification. It read (or so it says) the full Annual Reports for 5 years to answer my queries.

If anyone had to do this manually, it would take days on end - but the entire interaction was over in 10 minutes.

I have not gone through each and every detail for verification, but on a superficial level it matches some of the figures that come up in Screener.

My real question is: can an AI agent be trusted? If yes, it opens up a whole new Pandora’s box.

Cyient Stock Evaluation by AI Agent.pdf (245.2 KB)

It does bring out many facts and data that would otherwise take a lot of time to gather.

But there is a negative side too. Recently I bought a penny stock Unjha Formulations. Before buying, I fed the current annual report to Perplexity and asked the AI if there was anything suspicious about the sudden profits. It gave a one page reply, clearing the stock of my doubts.

Later, I went into the Annual Report myself. The second/third page itself revealed that the company had bought a crore’s worth of psyllium from another company…owned by one of the directors of Unjha. I hastily sold the stock.

I laboured with the AI, asking the queries we would otherwise try to answer ourselves to find the right stock. The datapoints shared were somewhat agreeable, and even the investor call details were true. This leads to the bigger question - if there is a 50% chance of it being right, there would be hundreds of “experts” cropping up in every corner based on feed from AI - this is what scares me.

What worries me is the AI missing such a glaring red flag. Even then, I feel it is all right to run our feelings by some AI app for ‘another view’, if not exactly for a verdict.

As for the apprehension that this will give rise to experts mushrooming, how sure can we be that many ‘experts’ are not already relying on it?

One thing I am sure of is: AI can’t be fool-proof. In summarising Annual Reports and information from other sources, it is also likely to miss out on something important, or to overemphasise some less significant information.

Disclaimer: I am as ‘layman’ as they come as far as AI is concerned.

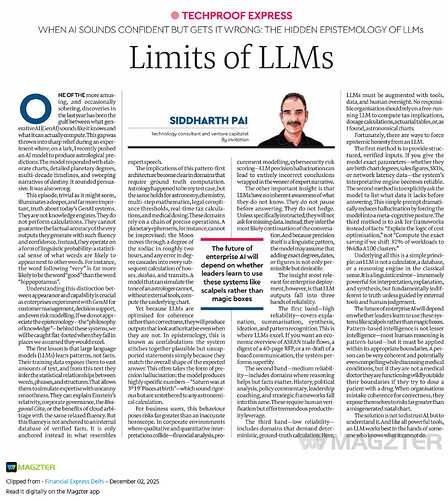

A very timely article on the limitations of LLMs

We must remember they are pattern recognition engines and not subject experts.

How do you feed the AR to Perplexity?

I just copy-pasted it. It then reads it.

In my view, trusting AI will take time: use cases need to grow across industries (including finance), and the human in the loop can step back only after validating the output for some period. Till then, we cannot trust the output blindly and will need to validate it. Having said that, output based on pre-defined data/reports will be better than predictions of order book/growth/share price, which may be hallucinated from whatever underlying data the LLM used. Running the same query across multiple LLMs (ChatGPT, Grok, Gemini, Perplexity) helps to compare in such cases.
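
The comparison across multiple LLMs could be done by hand, but a small script makes the mismatches easier to spot. This is only a minimal sketch under assumptions: the figures are typed in manually from each model's reply, the model names (`model_a` etc.), metric names and numbers are made-up placeholders (not real company data), and the 2% tolerance is an arbitrary choice. The function name `flag_disagreements` is hypothetical.

```python
from statistics import median


def flag_disagreements(answers, tolerance=0.02):
    """Return the metrics that need a manual check.

    answers: {model_name: {metric_name: value}}, typed in from each reply.
    A metric is flagged if only one model reported it, or if any model
    differs from the median by more than `tolerance` (relative).
    """
    metrics = set()
    for figures in answers.values():
        metrics.update(figures)

    flagged = {}
    for metric in sorted(metrics):
        values = {
            model: figures[metric]
            for model, figures in answers.items()
            if metric in figures
        }
        if len(values) < 2:
            flagged[metric] = values  # nothing to cross-check against
            continue
        mid = median(values.values())
        scale = abs(mid) or 1.0  # avoid dividing by zero
        if any(abs(v - mid) / scale > tolerance for v in values.values()):
            flagged[metric] = values
    return flagged


# Placeholder numbers for illustration only.
sample_answers = {
    "model_a": {"revenue": 1000.0, "net_profit": 100.0},
    "model_b": {"revenue": 1000.0, "net_profit": 102.0},
    "model_c": {"revenue": 1005.0, "net_profit": 150.0, "order_book": 500.0},
}

result = flag_disagreements(sample_answers)
for metric, values in result.items():
    print(metric, values)
```

Anything the script flags still has to be checked against the Annual Report itself; agreement between models only means they said the same thing, not that it is correct.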

I extensively use AI for understanding the businesses.

Here are some observations, cautions & key takeaways:

1.) Pro models tend to perform far better than the free tiers for the same set of queries.

2.) When feeding PDFs & screenshots, models with better OCR capabilities tend to parse information in a more structured manner. (The idea is to learn how to use OCR models, parse structured information from the PDFs & then feed it to your respective agents. In my experience, the AWS model is best for OCR, followed by Gemini Pro.)

3.) AI agents tend to hallucinate even on their pro versions & latest models. They will make up information that they don’t understand or couldn’t parse. The best way around it is to have strict protocols & guardrails in your prompt so as to minimise the hallucination rate. The best way to confirm anything is to ask the agent to cross-check it, saying the answer looks doubtful, and to feed the same queries to 2 models.

4.) With extensive usage, you get to know which model works better for which kind of query. Some things work better on Gemini, some on ChatGPT, some on NotebookLM & some on Perplexity.

5.) Go to & fro with your prompting (ask your agent why it made this or that error & how to eliminate it next time) and try to create a universal prompt for each agent. It takes time to perfect a prompt by eliminating the errors. Once mastered for a specific usage, say analysis of “latest results”, it works wonders & efficiency is more than 90% every time, compared with generic prompts. But again, for each different agent you will need to create a different set of prompts & save it.
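
Points 3.) and 5.) above (guardrails in the prompt, and a saved prompt per agent) could be kept in a small script rather than retyped each time. This is a rough sketch, not a tested recipe: the guardrail wording is my own assumption, `agent_a`/`agent_b` are placeholder names, and the helper functions are hypothetical. It only builds and stores the prompt text; it does not call any AI service.

```python
import json
import tempfile
from pathlib import Path

# Assumed guardrail wording; refine it as you learn where your agent slips.
GUARDRAILS = (
    "Use only the documents provided in this chat.",
    "Give the page or section for every figure you report.",
    "If something is missing or unreadable, reply 'not found' instead of estimating.",
)


def build_prompt(task, guardrails=GUARDRAILS):
    """Combine a task description with numbered guardrail rules."""
    rules = "\n".join(
        f"{number}. {rule}" for number, rule in enumerate(guardrails, start=1)
    )
    return f"Task: {task}\n\nRules:\n{rules}"


def save_prompts(prompts, path):
    """Store one refined prompt per agent as JSON."""
    path.write_text(json.dumps(prompts, indent=2), encoding="utf-8")


def load_prompts(path):
    """Read the saved prompts back as a dict."""
    return json.loads(path.read_text(encoding="utf-8"))


# One prompt per agent for the "latest results" use case.
prompts = {
    "agent_a": build_prompt("Summarise the latest quarterly results."),
    "agent_b": build_prompt("Summarise the latest quarterly results in a table."),
}

# A temporary folder is used here; in practice, pick a permanent file.
with tempfile.TemporaryDirectory() as folder:
    prompt_file = Path(folder) / "prompts.json"
    save_prompts(prompts, prompt_file)
    restored = load_prompts(prompt_file)

print(restored["agent_a"])
```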

Adding to this, as with stocks, we need to understand the value chain of AI & LMs to extract the best out of them.

To put it in technical terms, they use context stacks. Context engineering is the real meta.

Prompt engineering was a hack for the early days of AI, like learning to talk to a foreigner using short phrases and keywords.

But today’s models don’t just understand instructions. They understand environments.

Your job isn’t to “prompt” the model.

It’s to architect its context.

Think of context as a digital environment you build around the model before it ever starts generating.

You define:

• Who it should “be” (role/persona)

• What it’s trying to achieve (goals)

• How it should communicate (tone/style)

• What to reference (examples, data, past work)

This is what drives consistent, on-brand, high-quality output.

The model is no longer a “tool” you use. It’s literally a team member you onboard…

And like any great hire, it performs best when it understands your brand, your goals, and your expectations, not when you send it some random instructions.

That’s context engineering.
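
The four elements listed above (role, goals, tone, references) could be put together along these lines. This is just an illustrative sketch: the class name `ModelContext`, the field names and the example values are all my own assumptions, and the rendered text would simply be pasted in before your actual question.

```python
from dataclasses import dataclass, field


@dataclass
class ModelContext:
    """The environment built around the model before it starts generating."""

    role: str                 # who it should "be"
    goals: list               # what it is trying to achieve
    tone: str                 # how it should communicate
    references: list = field(default_factory=list)  # examples, data, past work

    def render(self):
        """Turn the context into one block of text to place before the query."""
        parts = [
            f"Role: {self.role}",
            "Goals:\n" + "\n".join(f"- {goal}" for goal in self.goals),
            f"Tone: {self.tone}",
        ]
        if self.references:
            parts.append("Reference material:\n" + "\n\n".join(self.references))
        return "\n\n".join(parts)


# Example values are placeholders.
context = ModelContext(
    role="Equity research assistant",
    goals=["Summarise the annual report", "List related-party transactions"],
    tone="Plain, factual, no recommendations",
    references=["<paste annual report text here>"],
)

context_text = context.render()
print(context_text)
```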

I have used NotebookLM: I manually uploaded all the files and also added web search as a source, and it was great to get the details in a structured manner.

Also, Screener AI is the best of all I have seen till now. All the other LLMs either generalize, assume, or keep the discussion at a higher level. Screener replies to the question on the spot, but it’s expensive.