The second part was not for you, but it’s a point I have come across often on Twitter, etc. Yes, we are in agreement. Also, I’m not sure I can totally trust what the Chinese say with regard to DeepSeek … the Chinese are not very IP friendly and they have their ways.

If there is a single technology America needs to bring about the “thrilling new era of national success” that President Donald Trump promised in his inauguration speech, it is generative artificial intelligence. At the very least, AI will add to the next decade’s productivity gains, fuelling economic growth. At the most, it will power humanity through a transformation comparable to the Industrial Revolution.

Mr Trump’s hosting the next day of the launch of “the largest AI infrastructure project in history” shows he grasps the potential. But so does the rest of the world, and most of all China. Even as Mr Trump was giving his inaugural oration, a Chinese firm released the latest impressive large language model (LLM). Suddenly, America’s lead over China in AI looks smaller than at any time since ChatGPT became famous.

China’s catch-up is startling because it had been so far behind, and because America had set out to slow it down. Joe Biden’s administration feared that advanced AI could secure the Chinese Communist Party (CCP) military supremacy. So America has curtailed exports to China of the best chips for training AI and cut off China’s access to many of the machines needed to make substitutes. Behind its protective wall, Silicon Valley has swaggered. Chinese researchers devour American papers on AI; Americans have rarely returned the compliment.

Yet China’s most recent progress is upending the industry and embarrassing American policymakers. The success of the Chinese models, combined with industry-wide changes, could turn the economics of AI on its head. America must prepare for a world in which Chinese AI is breathing down its neck.

China’s LLMs are not the very best. But they are far cheaper to make. QwQ, owned by Alibaba, an e-commerce giant, was launched in November and is less than three months behind America’s top models. DeepSeek, whose creator was spun out of an investment firm, ranks seventh by one benchmark. It was apparently trained using 2,000 second-rate chips—versus 16,000 first-class chips for Meta’s model, which DeepSeek beats on some rankings. The cost of training an American LLM is tens of millions of dollars and rising. DeepSeek’s owner says it spent under $6m.

American firms can copy DeepSeek’s techniques if they want to, because its model is open-source. But cheap training will change the industry at the same time as model design is evolving. China’s inauguration-day release was DeepSeek’s “reasoning” model, designed to compete with a state-of-the-art offering by OpenAI. These models talk to themselves before answering a query. This “thinking” produces a better answer, but it also uses more electricity. As the quality of output goes up, the costs mount.

The result is that, just as China has brought down the fixed cost of building models, so the marginal cost of querying them is going up. If those two trends continue, the economics of the tech industry would invert. In web search and social networking, replicating a giant incumbent like Google involved enormous fixed costs of investment and the capacity to bear huge losses. But the cost per search was infinitesimal. This—and the network effects inherent to many web technologies—made such markets winner-takes-all.

If good-enough AI models can be trained relatively cheaply, then models will proliferate, especially as many countries are desperate to have their own. And a high cost-per-query may likewise encourage more built-for-purpose models that yield efficient, specialised answers with minimal querying.

The other consequence of China’s breakthrough is that America faces asymmetric competition. It is now clear that China will innovate around obstacles such as a lack of the best chips, whether by efficiency gains or by compensating for an absence of high-quality hardware with more quantity. China’s homegrown chips are getting better, including those designed by Huawei, a technology firm that a generation ago achieved widespread adoption of its telecoms equipment with a cheap-and-cheerful approach.

If China stays close to the frontier, it could be the first to make the leap to superintelligence. Should that happen, it might gain more than just a military advantage. In a superintelligence scenario, winner-takes-all dynamics may suddenly reassert themselves. Even if the industry stays on today’s track, the widespread adoption of Chinese AI around the world could give the CCP enormous political influence, at least as worrying as the propaganda threat posed by TikTok, a Chinese-owned video-sharing app whose future in America remains unclear.

What should Mr Trump do? His infrastructure announcement was a good start. America must clear legal obstacles to building data centres. It should also ensure that hiring foreign engineers is easy, and reform defence procurement to encourage the rapid adoption of AI.

Some argue that he should also repeal the chip-industry export bans. The Biden administration conceded that the ban failed to contain Chinese AI. Yet that does not mean it accomplished nothing. In the worst case, AI could be as deadly as nuclear weapons. America would never ship its adversaries the components for nukes, even if they had other ways of getting them. Chinese AI would surely be stronger still if it now regained easy access to the very best chips.

Agencies or agency

More important is to pare back Mr Biden’s draft “AI diffusion rule”, which would govern which countries have access to American technology. This is designed to force other countries into America’s AI ecosystem, but the tech industry has argued that, by laying down red tape, it will do the opposite. With every Chinese advance, this objection becomes more credible. If America assumes that its technology is the only option for the likes of India or Indonesia, it risks overplaying its hand. Some tech whizzes promise the next innovation will once again put America far in front. Perhaps. But it would be dangerous to take America’s lead for granted.

Source: Mint article

Is this an attempt at damage limitation? She has put up the same post on LinkedIn, where someone commented with some questions and she replied that her team will get in contact and answer them. Can we get such questions answered publicly instead of individually and privately? I too agree with the comment made on LinkedIn… OTOH on X/Twitter people are just venting their anger.

I suppose it is.

However, I don’t expect upper management to resolve queries like these on a public forum. It’s not a complaint like “my account is not working”. It’s a serious question on the company’s strategy and is essentially a request for information that is not in the public domain. Company executives cannot share such information in a semi-formal environment, not just because of the PR implications but because of legal and regulatory constraints as well.

The questions are totally valid though.

I’d give the company the benefit of the doubt for now, for a few reasons.

- The company was completely silent and suddenly came out with a HUGE deal with L&T. They are patient and cautious.

- If you look at the history, they’ve suffered third-degree burns from customer concentration in the past. The leadership hasn’t changed, so I will assume, for now, that they won’t make the same mistake.

- I’d rephrase the question from customer loss to customer revenue, as the primary cause stated in the concall was not customer attrition but rather the end of training. Or they lied straight-faced about it to protect their behinds.

- AI is cut-throat and there are no ethics in this domain, as exemplified by the US ban on AI chips, or by Sam Altman asking the US Congress to “regulate/control” AI innovation after OpenAI had established itself as the leader. If there’s a public fight among states, there are definitely private tugs of war between corporates. Silence is golden here.

E2E is a huge wild card right now, arguably the biggest one traded on Indian bourses.

Exactly what I thought. But she says “my team will reach out to you and address your concerns”, which actually now raises concerns for me. Why tell that individual investor alone? Just file a disclosure with the exchanges and make that “address your concerns” public.

DeepSeek, with its lower cost (allegedly), on-par performance, and free and open-source models, already seems to be stealing that lead… Let’s see.

How reliable is the claim that DeepSeek actually ran on gaming/legacy GPUs to do its work? Is there any independent corroboration apart from company-released PDFs?

Secondly, how trustworthy, and therefore how usable, would DeepSeek be? Is there no concern about data leaks, user profiling, etc., of the kind raised about companies like TikTok?

I am asking out of curiosity at this sudden trust in Chinese tech when the claims are pretty tall …

Don’t know about the first part. But as far as trust and safety etc. is concerned it’s an open source model which can be downloaded and run completely offline. So in my limited knowledge that should not be a concern at all.

I know it is an infrastructure order. However, once the infrastructure is built, E2E can come into the picture for the software needed to run this capacity.

The tender results are expected any time over the next week.

https://www.meity.gov.in/writereaddata/files/Tendernotice_0.pdf

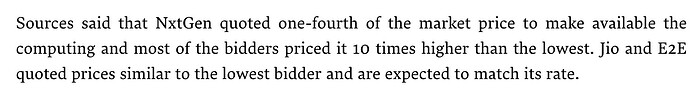

E2E Networks and NxtGen Datacenter and Cloud Technologies confirmed that they have been called for the financial bid opening.

After the opening of the bid, the L1 bidder will be announced, following which the other companies will be asked to offer compute-as-a-service at the price offered by the L1 bidder.

ET had reported on January 16 that Microsoft Azure has partnered with Mumbai-based Yotta Data Services for the bid while Amazon Web Services had partnered with CMS Computers, Locuz Enterprise Solutions, Orient Technologies and Vensysco Technologies.

Indian companies have an advantage over hyperscalers, said one of the technically qualified bidders to ET.

“Indian companies are already offering GPU-as-a-service at a price 40% to 50% lower than hyperscalers. Global hyperscalers cannot provide GPU-as-a-service in India at a price lower than their global price for just one bid,” said one of the bidders. “Once they publish their price in India, their global clients will start asking for the same price. Hence, in a race to the bottom (in terms of pricing) Indian companies will win.”

Any analysis of DeepSeek’s impact on this company?

Right now the AI community believes that with these efficiency gains there will be more people running and trying to train their own LLMs, just because it’s so easy to do so now. This will benefit Nvidia and neocloud providers like E2E and CoreWeave.

Microsoft and Meta will announce their earnings tonight at 5:00 PM PST. We will get to learn more about how they are looking at this.

Overall I think it’s extremely bullish for E2E Networks and hardware manufacturers like Nvidia.

Yotta is loss-making and has a valuation of US$2.75 bn

According to the investor presentation, Yotta’s revenue increased from $22 million in FY23 to an estimated $49.2 million in FY24. Its net loss dropped from $53.2 million in FY23 to $52.8 million in FY24. The company estimates that its revenue will rise to $156 million in FY25, whereas its net loss will go up to $113.4 million. A capital expenditure of billions of dollars is projected during the financial year.
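To put the quoted numbers side by side, here is a rough Python sketch that works out year-on-year changes and the ratio of net loss to revenue. It uses only the figures quoted above (US$ million); FY24 is an estimate and FY25 a company projection, and the helper names `growth_pct` and `loss_to_revenue` are made up for illustration.

```python
# Figures in US$ million, as quoted from Yotta's investor presentation above.
# FY24 is an estimate and FY25 is the company's own projection.
revenue = {"FY23": 22.0, "FY24": 49.2, "FY25": 156.0}
net_loss = {"FY23": 53.2, "FY24": 52.8, "FY25": 113.4}


def growth_pct(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


def loss_to_revenue(year):
    """Net loss per dollar of revenue in the given year."""
    return net_loss[year] / revenue[year]


years = list(revenue)
for prev, cur in zip(years, years[1:]):
    rev_change = growth_pct(revenue[prev], revenue[cur])
    loss_change = growth_pct(net_loss[prev], net_loss[cur])
    print(f"{prev}->{cur}: revenue {rev_change:+.1f}%, net loss {loss_change:+.1f}%")

for year in years:
    print(f"{year}: net loss is {loss_to_revenue(year):.2f}x revenue")
```

On these numbers, projected revenue grows faster than the projected loss, so the loss shrinks relative to revenue even as it more than doubles in absolute terms.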

The five-year-old startup was recently in the limelight after it became the first Indian company to acquire AI chips from Nvidia in March. Yotta placed an order for 16,000 H100 chips, including the newly announced Blackwell AI-training GPU, in September 2023. The first batch of 4,000 chips arrived in March, comprising Nvidia H100 Tensor Core GPUs. The entire lot is contracted to customers.

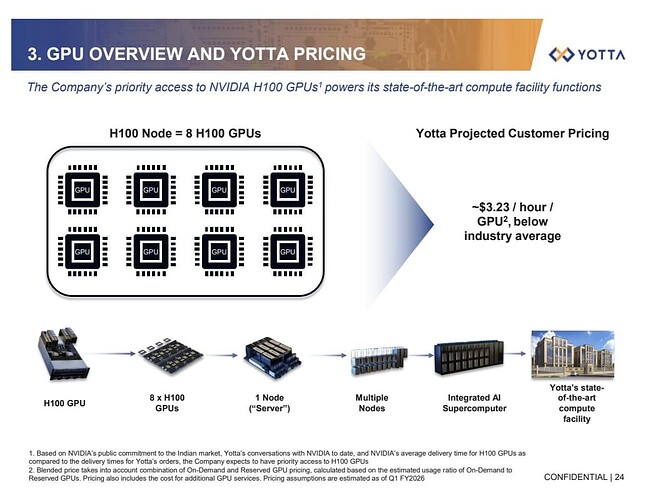

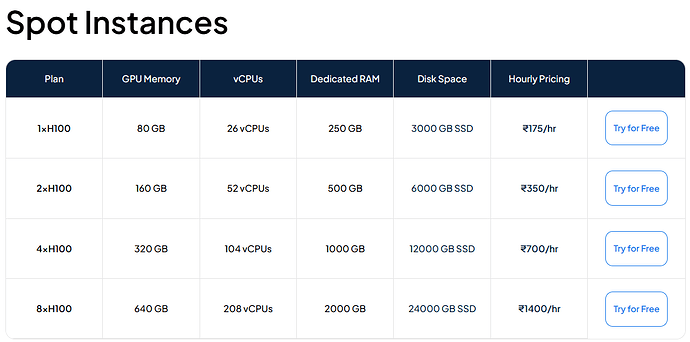

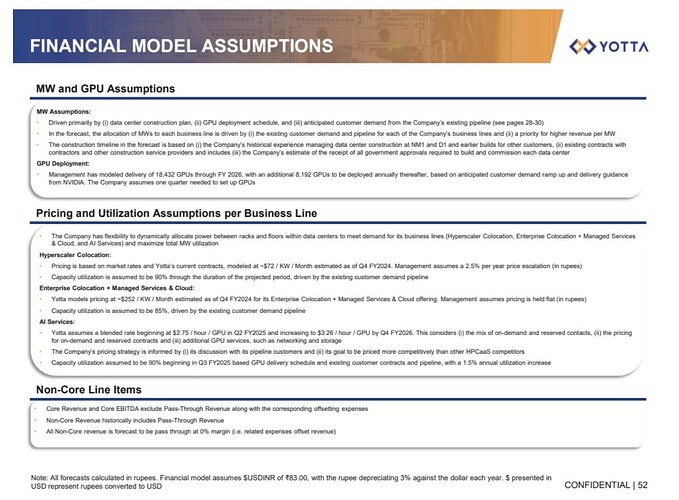

Yotta’s investor presentation gives projected customer pricing, which it says is below the industry average. How does this compare to E2E, assuming the service is similar?

If we go by spot instances, E2E would be cheaper at $2.02. On-demand is more expensive at $5. Not sure what Yotta is talking about, but E2E is definitely competitive on pricing.

Here they have given some more details. It’s for “AI services”, a blend of on-demand and reserved capacity, and it was $2.75 in FY25Q2, increasing to $3.26 in FY26Q4.

Really want to find out if these are for the same services which E2E is providing!

source : https://www.sec.gov/Archives/edgar/data/1848437/000110465924074337/tm2417925d1_ex99-2.htm
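A quick, hedged way to line up the prices mentioned in the last few posts. This assumes all four figures are US$ per GPU-hour for a comparable GPU class, which the thread does not confirm, so treat the output as indicative only. The `premium_pct` helper is just for illustration.

```python
# Prices as quoted in the posts above. ASSUMPTION: all are US$ per GPU-hour
# for a comparable GPU class; the thread does not state the unit or the SKU.
e2e_spot = 2.02
e2e_on_demand = 5.00
yotta_blended = {"FY25Q2": 2.75, "FY26Q4": 3.26}  # blend of on-demand and reserved


def premium_pct(price, baseline):
    """How far price sits above (+) or below (-) baseline, in percent."""
    return (price - baseline) / baseline * 100


for period, price in yotta_blended.items():
    vs_spot = premium_pct(price, e2e_spot)
    vs_on_demand = premium_pct(price, e2e_on_demand)
    print(f"Yotta {period}: {vs_spot:+.1f}% vs E2E spot, "
          f"{vs_on_demand:+.1f}% vs E2E on-demand")
```

If the assumption holds, Yotta’s blended figure sits between E2E’s spot and on-demand prices in both periods, which is what you would expect from a blend that includes reserved capacity.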

BREAKING: Moneycontrol scoop confirmed. The Union Minister has just laid out the next phase of the India AI mission and the number of GPUs we have to build foundational models: a common compute facility for startups and researchers.

“I am very happy to say that against 10,000 GPUs, we have actually empanelled 18,693 GPUs”

“Our startups, researchers, academia, they all want to access compute facility. That’s why in the India AI mission we have taken the common compute as the most important part of the mission”

12,896 are (Nvidia) H100s, 1,480 are (Nvidia) H200s – the most powerful GPUs. Overall, net high-end GPUs, we have 15,000 GPUs in the entire process. To give context, DeepSeek was trained on 2,000 GPUs.

Ashwini Vaishnaw: This will be used for creating AI models, distillation processes, new algorithms.

Roughly 10,000 GPUs (out of the 18,693) are available today.
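For a sense of scale, a small Python sketch of the arithmetic behind these GPU counts. All inputs are the figures quoted above; the 2,000-GPU DeepSeek number is the one cited in the post (it traces back to the company’s own claim), and the variable names are only illustrative.

```python
# GPU counts as quoted in the minister's remarks above.
target = 10_000
empanelled = 18_693
h100 = 12_896
h200 = 1_480
available_today = 10_000  # "roughly", per the post
deepseek_gpus = 2_000     # figure cited in the post, based on DeepSeek's own claim

named_high_end = h100 + h200
over_target_pct = (empanelled - target) / target * 100

print(f"H100 + H200: {named_high_end}")
print(f"Empanelled vs target: {over_target_pct:+.1f}%")
print(f"Empanelled pool vs DeepSeek's cited cluster: {empanelled / deepseek_gpus:.1f}x")
print(f"Available today vs DeepSeek's cited cluster: {available_today / deepseek_gpus:.1f}x")
```

The H100 and H200 counts add up to 14,376, so the “15,000 high-end GPUs” in the quote looks like a rounded figure or includes other SKUs not named here.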

“The second big mission of the IndiaAI mission was to develop an AI model. Our teams have been working with startups, academia”

“We have now created the framework which will be launched today. We are calling for proposals to develop our own foundational models, where the Indian context, the Indian languages, the culture of our country where the biases can be removed. Where the datasets, for our country, for our citizens – that process will start today”

“We believe that there are at least 6 major developers who can develop AI models in 6-8 months on the outer limit, and 4-6 months on a more optimistic estimate”